Practical Embedded Intelligence

Enabling optimized edge AI inference performance, system power and cost

Our blueprints, software and tools for edge AI let you effectively balance deep learning performance with system power and cost. We offer practical embedded inference solutions for next-generation vehicles, smart cameras, edge AI boxes, and autonomous machines and robots. In addition to general compute and deep learning cores, our blueprints for edge AI integrate imaging, vision and multimedia cores, security enablers, and optional microcontrollers for applications that require SIL-3 and ASIL-D functional safety certifications.

Explore

- High-speed, low-latency embedded AI

- High throughput per TOPS

- Low-power consumption

Discover blueprints for edge AI.

Evaluate

- Access to boards through the cloud

- Deep learning benchmarks in minutes

- Pre-trained models and AI tools

Start your deep learning evaluation.

Develop

- Industry-standard APIs

- Low-cost starter kits

- 24/7 online engineering support

Develop your solution faster than ever.

Add intelligence to your design with our edge AI technology

From smart cameras and edge AI boxes to autonomous machines and robots, your opportunities to design with embedded intelligence are endless. Be inspired to build something great with our solutions for edge AI by exploring a collection of embedded AI projects from Neurulus and developers from our third-party ecosystem.

Explore smart camera projects:

- Image classification

- Object detection

- Semantic segmentation

- Simultaneous multiple inference

Explore autonomous machine and robot projects:

- Stereo vision depth estimation

- Environmental awareness using deep learning

- 3D obstacle detection using computer vision and deep learning

- Deep learning based visual localization

Don't sacrifice power or cost for high-speed AI

Our blueprints for edge AI have a unique heterogeneous architecture that enables high-speed AI and helps you reduce system power and cost. High levels of integration, including deep learning and computer vision accelerators, security enablers, and optional microcontrollers for functional safety applications, let you optimize AI performance for every watt and dollar spent.

Deep learning acceleration

The combination of our highly efficient digital signal processor (DSP) and Matrix Multiplication Accelerator delivers industry-leading embedded deep learning inference.
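The core operation a matrix multiplication accelerator speeds up is a general matrix multiply (GEMM); convolution layers are commonly lowered to it. The sketch below is a plain-Python reference for an 8-bit integer GEMM with 32-bit accumulation, a common fixed-point scheme. It assumes nothing about the actual accelerator's data types, tiling or API, and the helper name `int8_gemm` is hypothetical.

```python
from typing import List

Matrix = List[List[int]]

INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def int8_gemm(a: Matrix, b: Matrix) -> Matrix:
    """Reference GEMM: C = A x B with int8 inputs and int32 accumulators.

    Hypothetical helper for illustration; a hardware matrix unit performs
    many of these multiply-accumulates in parallel.
    """
    rows, inner = len(a), len(a[0])
    if len(b) != inner:
        raise ValueError("inner dimensions do not match")
    cols = len(b[0])
    for row in a + b:  # every input value must fit in a signed 8-bit integer
        if any(not INT8_MIN <= v <= INT8_MAX for v in row):
            raise ValueError("inputs must fit in int8")

    c = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # each int8 * int8 product fits in int16
            if not INT32_MIN <= acc <= INT32_MAX:
                raise OverflowError("accumulator exceeded int32 range")
            c[i][j] = acc
    return c


print(int8_gemm([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The wide accumulator is the design point: summing many 8-bit products in 8 bits would overflow almost immediately, so fixed-point hardware typically accumulates in 32 bits and rescales afterwards.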

Computer vision acceleration

Computationally intensive vision and multimedia tasks are offloaded to specialized hardware accelerators, yet remain configurable through industry-standard application programming interfaces (APIs).

Smart memory & bus architecture

A large internal memory combined with a nonblocking, high-bandwidth bus interconnect lets you take full advantage of our AI acceleration and reduce the number of double-data-rate (DDR) memory instances in your system.
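A quick way to see why on-chip memory matters is to estimate the DDR traffic that a single camera stream generates. The sketch below is back-of-the-envelope arithmetic, not a measurement of any specific device. It assumes NV12 frames (1.5 bytes per pixel) and counts each full-frame write to or read from external memory as one "pass"; the function name and the pass counts are hypothetical.

```python
def stream_ddr_bandwidth(width: int, height: int, bytes_per_pixel: float,
                         fps: float, passes: int = 1) -> float:
    """Estimate DDR traffic in bytes per second for one video stream.

    passes: how many times each full frame crosses the DDR interface
    (for example, one write by the camera interface plus one read by an
    accelerator is two passes). Keeping intermediates in on-chip memory
    lowers this number.
    """
    if min(width, height) <= 0 or bytes_per_pixel <= 0 or fps <= 0 or passes < 0:
        raise ValueError("dimensions, pixel size and frame rate must be positive")
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes * fps * passes


# 1080p NV12 at 30 fps is 3,110,400 bytes per frame
one_pass = stream_ddr_bandwidth(1920, 1080, 1.5, 30)
print(f"{one_pass / 1e6:.1f} MB/s per pass")  # 93.3 MB/s per pass
```

Each intermediate frame that stays on chip instead of being written out and read back removes passes, and the DDR load drops proportionally.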

Functional safety & security

Integrated functional safety features, such as self-test and error-correcting code (ECC), combined with cryptographic acceleration, hardware security module (HSM)-ready hardware and secure boot, help you create resilient and secure edge AI systems.
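To illustrate what an error-correcting code does, the sketch below implements the classic Hamming(7,4) code, which corrects any single flipped bit in a 7-bit word. It illustrates the principle only. Memory ECC in real devices typically uses wider single-error-correct, double-error-detect (SECDED) codes, and nothing here describes the specific ECC used on-chip. The helper names are hypothetical.

```python
from typing import List, Tuple


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7)."""
    if len(data) != 4 or any(b not in (0, 1) for b in data):
        raise ValueError("expected exactly 4 bits")
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    # Codeword in position order: p1 p2 d1 p4 d2 d3 d4
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword: List[int]) -> Tuple[List[int], int]:
    """Correct up to one flipped bit; return (data_bits, error_position or 0)."""
    bits = list(codeword)
    syndrome = 0
    for position, bit in enumerate(bits, start=1):
        if bit:
            syndrome ^= position  # XOR of set-bit positions is 0 for a valid word
    if syndrome:  # a non-zero syndrome is the 1-based position of the bad bit
        bits[syndrome - 1] ^= 1
    return [bits[2], bits[4], bits[5], bits[6]], syndrome


word = hamming74_encode([1, 0, 1, 1])  # [0, 1, 1, 0, 0, 1, 1]
word[4] ^= 1                           # flip the bit at position 5
print(hamming74_decode(word))          # ([1, 0, 1, 1], 5)
```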

Imagine the possibilities with industry-leading deep learning inference

Some of the industry's highest fixed-point embedded deep learning inference performance opens the door to a world of possibilities.
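Fixed-point inference stores weights and activations as small integers plus a scale factor. The sketch below shows symmetric per-tensor int8 quantization, one common scheme among several. The actual toolchain may use different schemes (for example per-channel scales or zero points), so treat the helper names and choices here as hypothetical.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers that share one scale (symmetric, per-tensor)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for int8; the range is clamped to [-qmax, qmax]
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats from quantized integers."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_symmetric(weights)
approx = dequantize(q, scale)
print(q)  # [50, -127, 0, 100]
# Rounding error is at most half a quantization step per value.
assert all(abs(w - a) <= scale / 2 + 1e-12 for w, a in zip(weights, approx))
```

Small values such as 0.003 collapse to zero, which is why quantization-aware tools check accuracy after conversion.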

Work with us to fine-tune your AI model performance

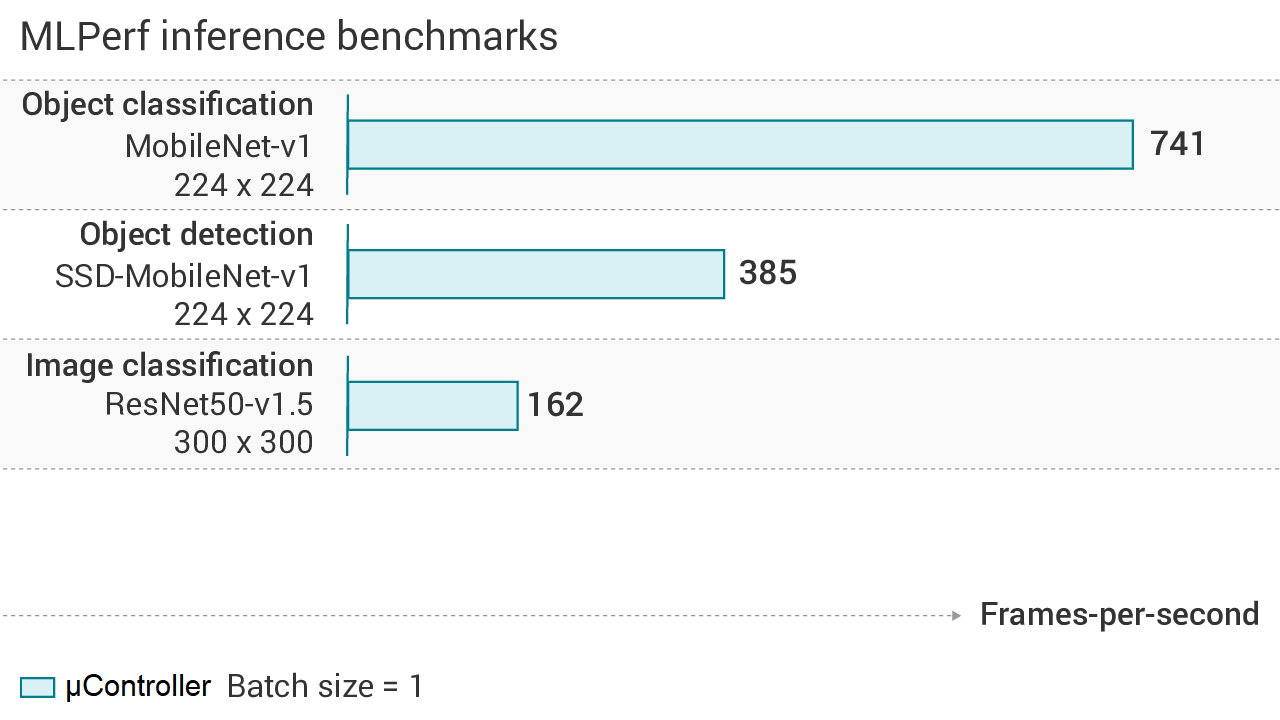

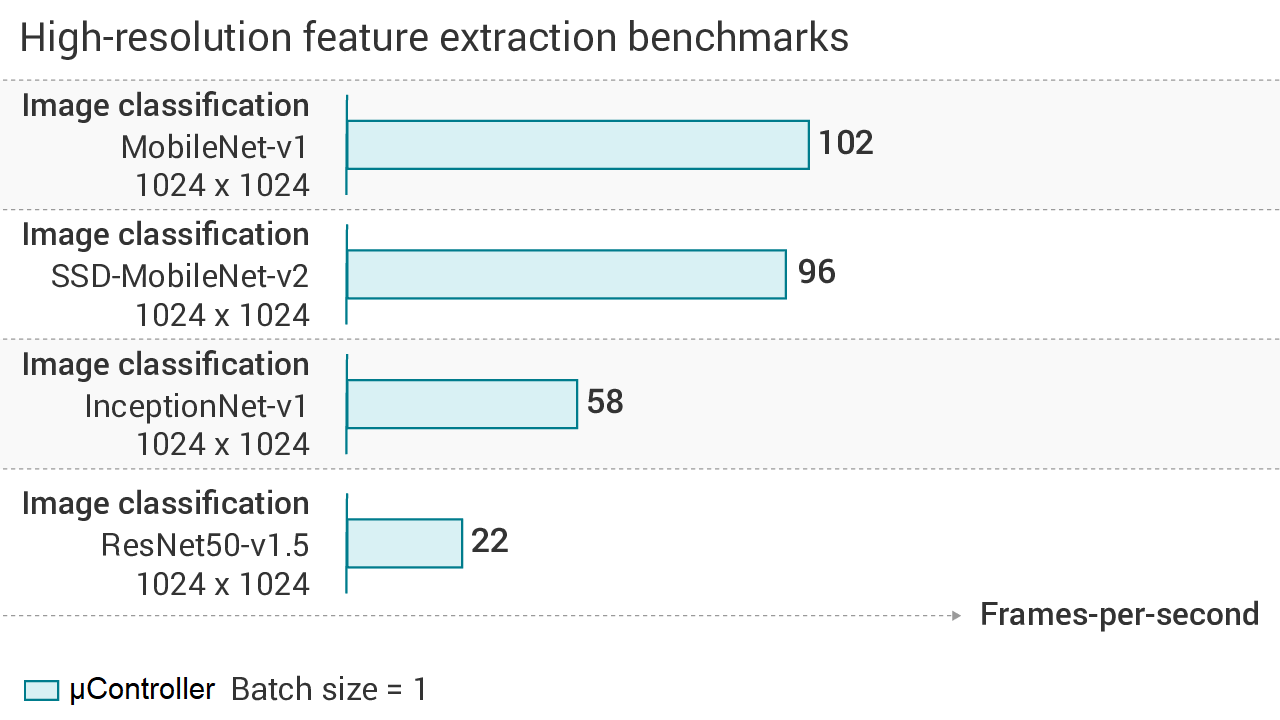

Use our AI software board support packages (BSPs) to measure latency, frames per second (FPS), double-data-rate (DDR) memory bandwidth and accuracy for your deep learning model.
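The sketch below shows how latency and FPS figures are typically derived from wall-clock timings. It is a generic host-side sketch, not the BSP's benchmarking tool. `run_inference` is a hypothetical stand-in for whatever call pushes one frame through the model on the target.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_inference: Callable[[], object], warmup: int = 5,
              iterations: int = 50) -> Dict[str, float]:
    """Time a single-frame inference callable and summarize latency and FPS."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    for _ in range(warmup):  # discard first runs while caches and clocks settle
        run_inference()

    latencies_ms = []
    start = time.perf_counter()
    for _ in range(iterations):
        t0 = time.perf_counter()
        run_inference()
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start

    return {
        "mean_ms": statistics.mean(latencies_ms),
        "median_ms": statistics.median(latencies_ms),
        "max_ms": max(latencies_ms),
        "fps": iterations / elapsed,  # frames are processed one at a time here
    }


if __name__ == "__main__":
    stats = benchmark(lambda: sum(i * i for i in range(10_000)))
    print({k: round(v, 3) for k, v in stats.items()})
```

Because this loop runs frames strictly one after another, FPS is roughly 1 / mean latency. A pipelined system that overlaps capture, pre-processing and inference can exceed that figure, which is why latency and FPS are reported separately.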

Accelerate your AI tasks with industry-standard APIs

Automatically unlock the full potential of state-of-the-art devices and accelerate your deep learning, imaging, vision and multimedia tasks with our Edge AI software development environment and BSPs. Explore demos for smart cameras and edge AI boxes created by us and by third-party hardware and software vendors in our Edge AI ecosystem.

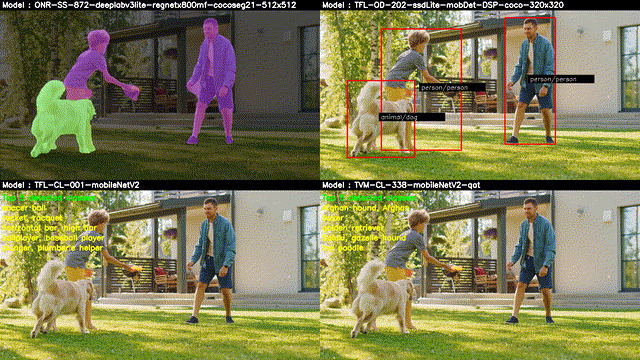

Image classification

Evaluate the performance of AI accelerators on controllers and processors using different pre-compiled TensorFlow Lite, ONNX, or TVM models and BSPs to classify images from USB and CSI camera inputs, as well as H.264 compressed video and JPEG image series.
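After a classification model runs, its raw output scores (logits) are usually turned into probabilities and ranked. The sketch below assumes the model emits logits. If a model already ends in a softmax layer, skip that step. The labels and scores are made up for illustration.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], labels: Sequence[str],
          k: int = 3) -> List[Tuple[str, float]]:
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(labels[i], probs[i]) for i in ranked[:k]]


labels = ["person", "car", "bicycle", "dog"]  # hypothetical class list
print(top_k([0.2, 3.1, 1.4, -0.5], labels, k=2))
```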

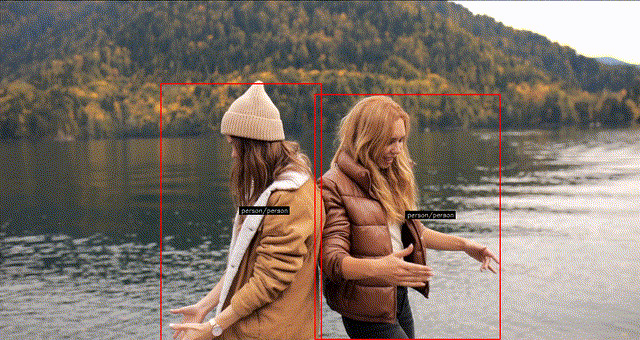

Object detection

Evaluate the performance of AI accelerators on controllers and processors using different pre-compiled TensorFlow Lite, ONNX, or TVM models to detect objects. Input sources include USB and CSI camera inputs, as well as H.264 compressed video and JPEG image series.
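Detection models typically produce many overlapping candidate boxes, and a common post-processing step is greedy non-maximum suppression (NMS). Whether NMS runs inside the compiled model or on a CPU core depends on the model and toolchain, which the page does not specify. The thresholds below are illustrative, and the sketch is class-agnostic (for per-class NMS, run it once per class).

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], score_threshold: float = 0.3,
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best box, drop boxes overlapping it, repeat."""
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) < iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]: box 1 overlaps box 0 too much
```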

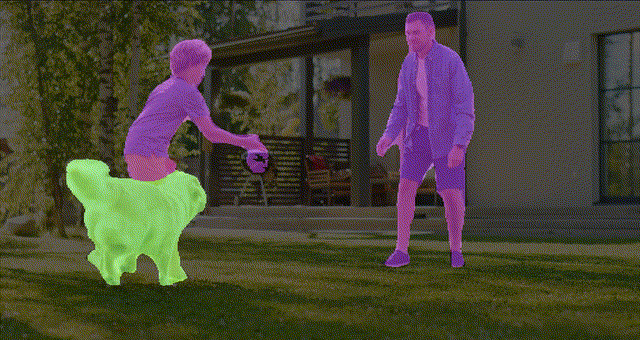

Semantic segmentation

Evaluate the performance of AI accelerators on controllers and processors using different pre-compiled TensorFlow Lite, ONNX, or TVM semantic segmentation models. Input sources include USB and CSI camera inputs, as well as H.264 compressed video and JPEG image series.
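A segmentation model outputs a score per class for every pixel, and the label map shown in overlays is the per-pixel argmax of those scores. The sketch below assumes a channel-first `[class][row][col]` layout. Some runtimes emit channel-last tensors or an argmax map directly, so the layout is an assumption, and the helper names are hypothetical.

```python
from typing import List

Scores = List[List[List[float]]]  # assumed layout: [class][row][col]


def label_map(scores: Scores) -> List[List[int]]:
    """Pick the highest-scoring class for every pixel (per-pixel argmax)."""
    num_classes = len(scores)
    rows, cols = len(scores[0]), len(scores[0][0])
    return [[max(range(num_classes), key=lambda c: scores[c][r][x])
             for x in range(cols)]
            for r in range(rows)]


def class_coverage(labels: List[List[int]], class_id: int) -> float:
    """Fraction of pixels assigned to class_id, e.g. a drivable-area class."""
    total = sum(len(row) for row in labels)
    hits = sum(row.count(class_id) for row in labels)
    return hits / total if total else 0.0


scores = [
    [[0.9, 0.1], [0.2, 0.8]],  # class 0
    [[0.1, 0.9], [0.8, 0.2]],  # class 1
]
print(label_map(scores))  # [[0, 1], [1, 0]]
```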

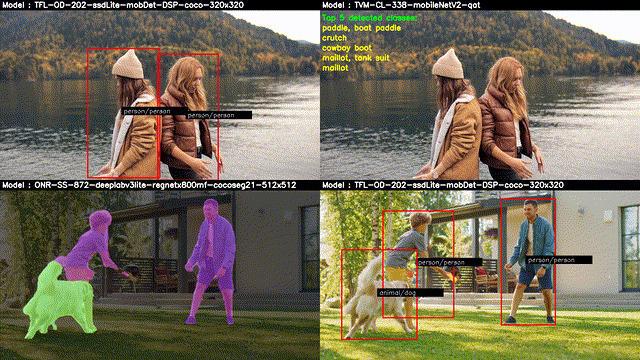

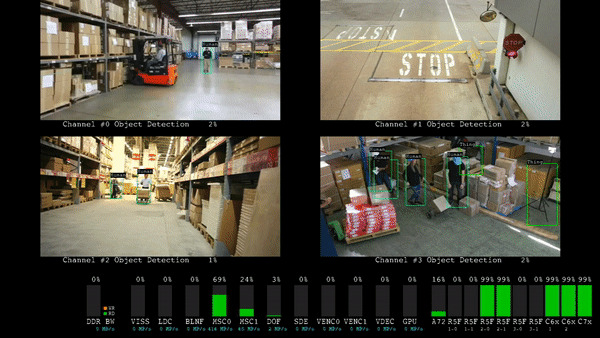

Single input, multi-inference

Use this data flow to run image classification, object detection and pixel-level semantic segmentation on frames from a single input source. Each output is overlaid on its own copy of the input image, and the results are arranged in a mosaic layout. The single combined output image can be displayed on a screen or saved as H.264 compressed video or a JPEG image series.
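The mosaic layout amounts to tiling one output frame with equal-size cells. The sketch below computes near-square grid positions. It is a hypothetical helper for the arithmetic only; how the demos actually configure their mosaic is not described here.

```python
import math
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) in output pixels


def mosaic_layout(num_tiles: int, out_width: int, out_height: int) -> List[Rect]:
    """Split one output frame into a near-square grid of equal tiles.

    Tiles are filled row by row; unused cells in the last row stay blank.
    """
    if num_tiles < 1:
        raise ValueError("need at least one tile")
    cols = math.ceil(math.sqrt(num_tiles))
    rows = math.ceil(num_tiles / cols)
    tile_w, tile_h = out_width // cols, out_height // rows
    return [((i % cols) * tile_w, (i // cols) * tile_h, tile_w, tile_h)
            for i in range(num_tiles)]


# Three overlays (classification, detection, segmentation) on a 1920x1080 display
for rect in mosaic_layout(3, 1920, 1080):
    print(rect)
# (0, 0, 960, 540)
# (960, 0, 960, 540)
# (0, 540, 960, 540)
```

With two input sources and three inference outputs each, six tiles form a 3x2 grid of 640x540 cells on the same display.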

Multiple input, multi-inference

Use this data flow to pass two input sources through multiple inference operations: image classification, object detection and semantic segmentation. Each output is overlaid on its own copy of the input image, and the results are arranged in a mosaic layout. The single combined output image can be displayed on a screen or saved as H.264 compressed video or a JPEG image series.

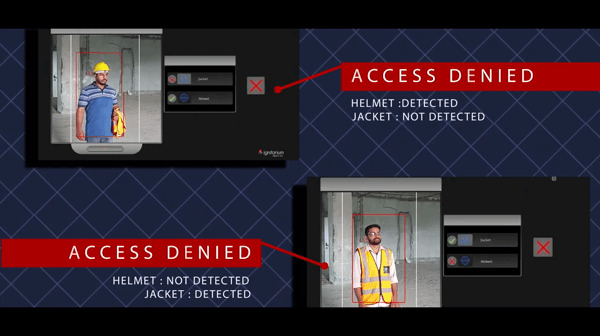

Protective equipment detector

Use an AI-based object detection solution for detecting specific types of personal protective equipment (PPE), such as jackets, helmets, gloves and goggles. The solution supports in-field trainable mode, allowing new types of PPE to be trained on the same inference hardware.

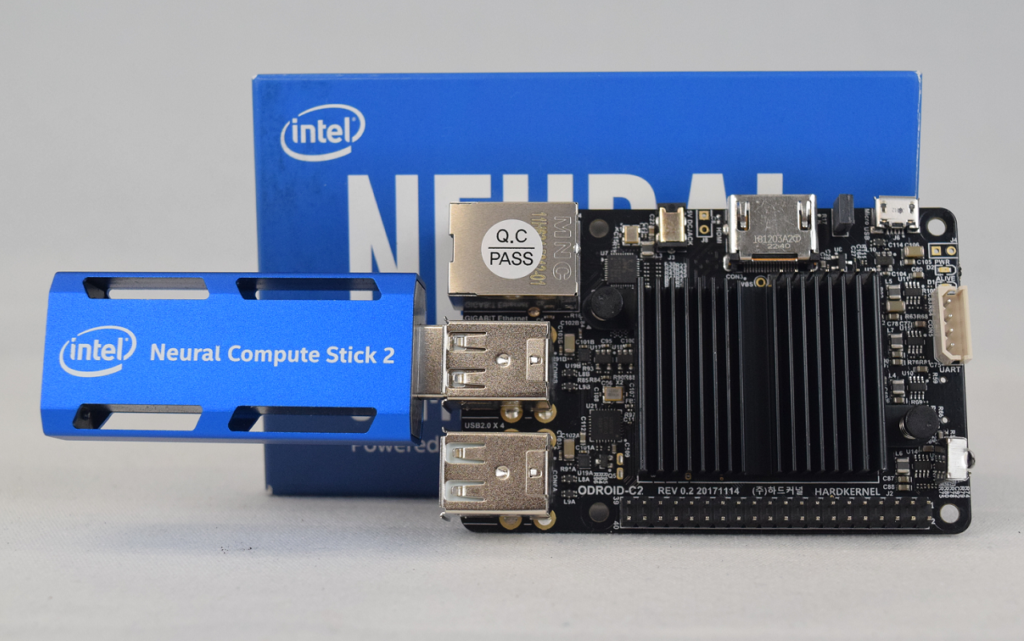

ML Edge deployment and inference

This edge device kit provides step-by-step instructions on using AI and IoT to orchestrate the deployment of a pre-trained and optimized object counting ML model to the edge device, run inference and send inference results to IoT Core.

Sensor fusion data acquisition system

Our Sensor Fusion Data Acquisition System is an exemplary data mining platform for edge applications. It records high-fidelity data without sacrificing storage space, and its rule-based automated recording capability greatly reduces human error.

Explore demos for autonomous machines and robots created by Texas Instruments and by third-party hardware and software vendors in the TI Edge AI ecosystem.

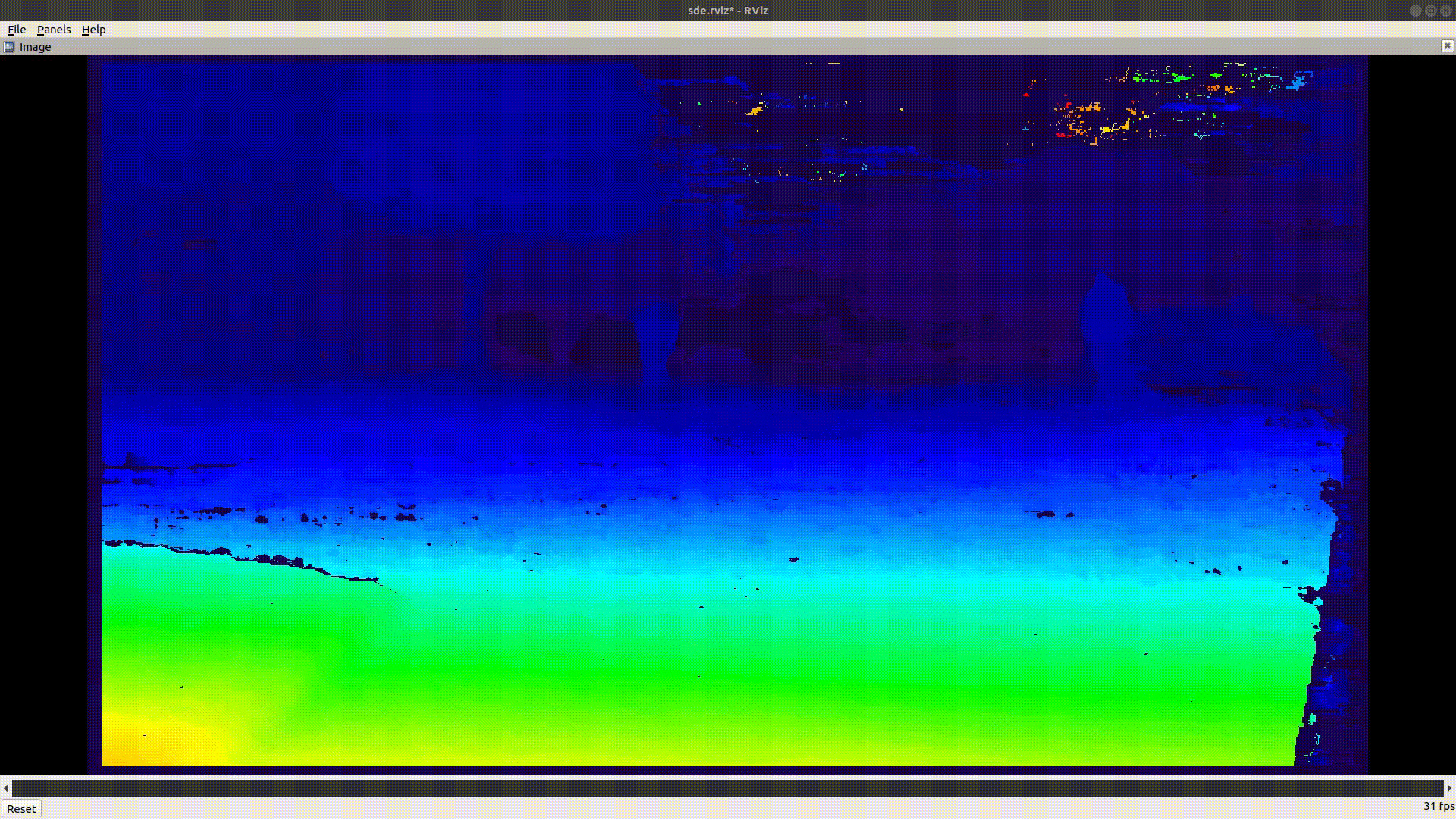

Stereo depth estimation

This Robot Operating System (ROS) demo applies hardware-accelerated stereo vision processing to a live stereo camera feed or a ROS bag file on the edge processor. Computationally intensive tasks such as image rectification, scaling and stereo disparity estimation run on the vision hardware accelerators.
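The accelerators produce a disparity map; turning disparity into metric depth uses the standard pinhole-stereo relation Z = f · B / d, where f is the focal length in pixels, B the baseline between the cameras and d the disparity in pixels. The sketch assumes rectified images and known calibration. The helper names and numbers are hypothetical.

```python
from typing import Optional, Tuple


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Depth Z = f * B / d for a rectified stereo pair; None if disparity is invalid."""
    if disparity_px <= 0:
        return None  # zero or negative disparity: no match, or a point at infinity
    return focal_px * baseline_m / disparity_px


def pixel_to_point(u: float, v: float, disparity_px: float, focal_px: float,
                   baseline_m: float, cx: float,
                   cy: float) -> Optional[Tuple[float, float, float]]:
    """Back-project pixel (u, v) to camera coordinates (X, Y, Z) in metres."""
    z = disparity_to_depth(disparity_px, focal_px, baseline_m)
    if z is None:
        return None
    return ((u - cx) * z / focal_px, (v - cy) * z / focal_px, z)


print(disparity_to_depth(42.0, focal_px=700.0, baseline_m=0.12))  # about 2.0 metres
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters much more for distant objects than for nearby ones.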

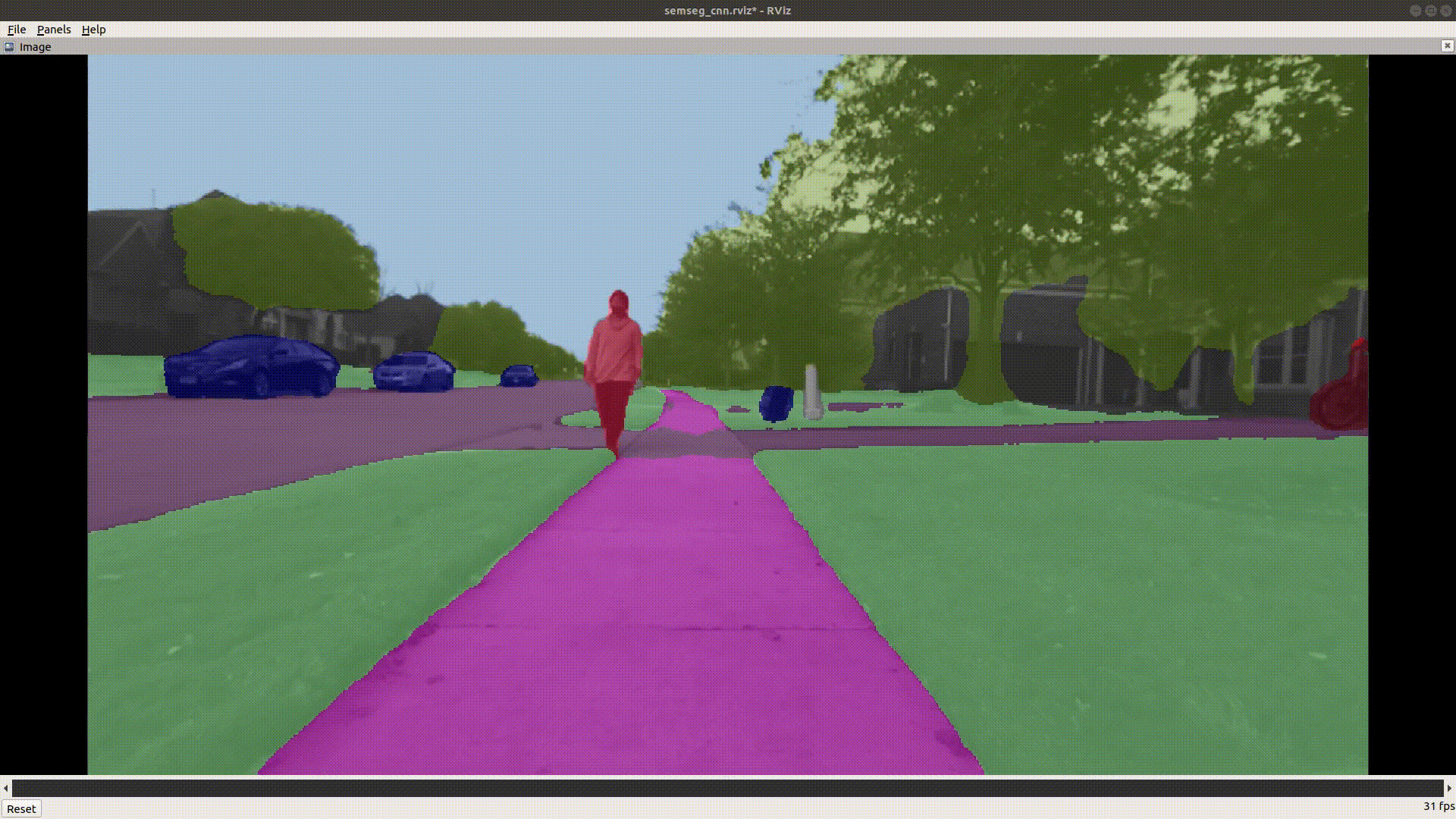

Environmental awareness

This ROS demo applies hardware-accelerated semantic segmentation to live camera or ROS bag data on the edge processor. Computationally intensive image pre-processing tasks such as image rectification and scaling run on the VPAC vision hardware accelerator, while AI processing is accelerated on the deep learning accelerator.

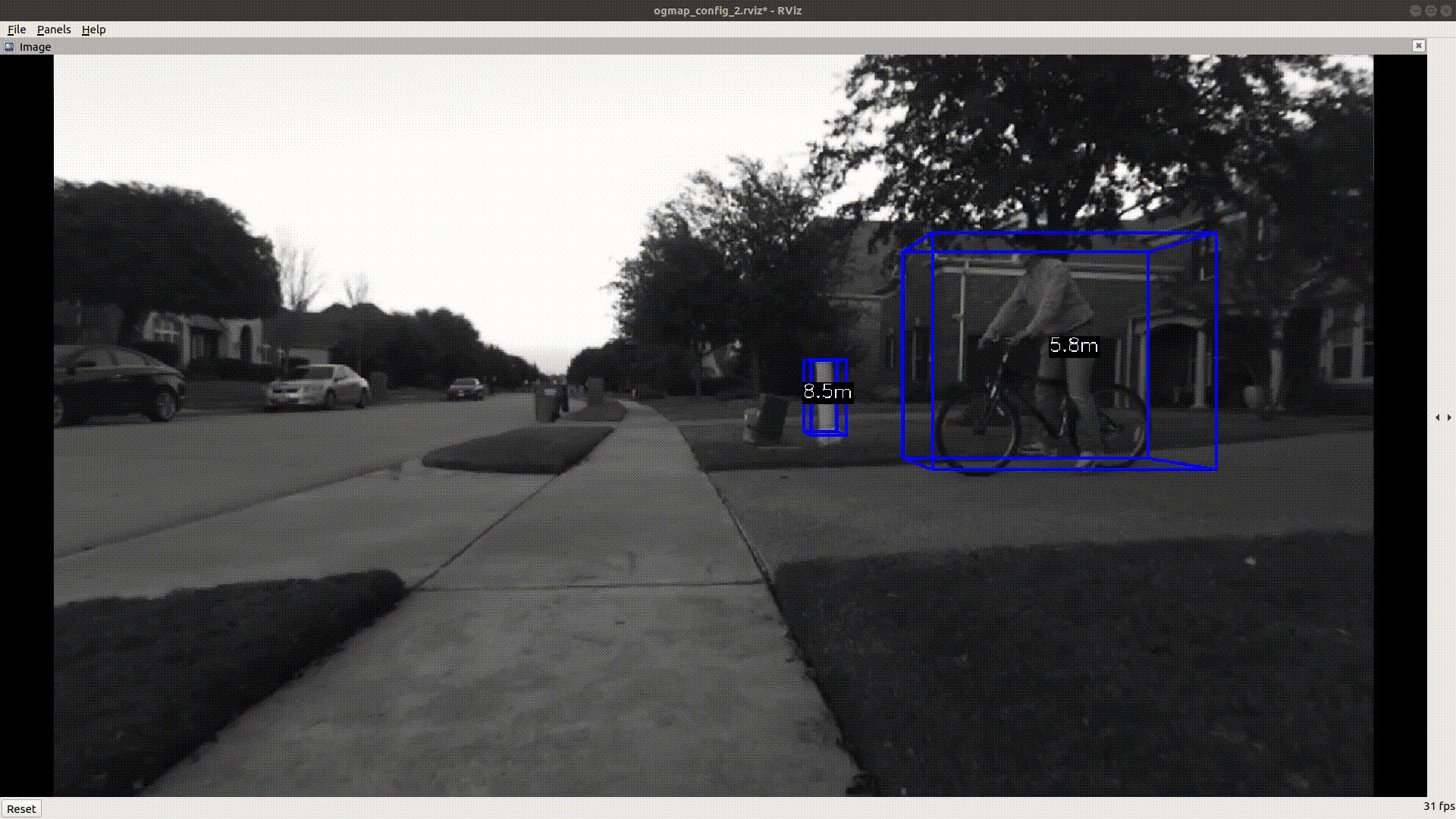

3D obstacle detection

This ROS demo applies hardware-accelerated 3D obstacle detection to stereo vision input. The hybrid computer vision and AI demo uses the vision hardware accelerators on the edge processor for image rectification, stereo disparity mapping and scaling, while AI-based semantic segmentation is accelerated on the deep learning accelerator.

Visual localization

This demo performs hardware-accelerated ego-vehicle localization, estimating a six-degree-of-freedom (6-DoF) pose. It uses a deep neural network, trained in a supervised manner, to learn a hand-computed feature descriptor such as KAZE. The AI- and computer vision-based demo uses the deep learning hardware accelerator and other cores to run the task efficiently, leaving the CPU cores completely free for other tasks.
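A 6-DoF pose is a 3D position plus an orientation (roll, pitch, yaw). The sketch below shows how such a pose is typically applied to map a point from the vehicle frame into the map frame. It assumes Z-Y-X Euler angles in radians. The demo's actual output format (for example quaternions or a 4x4 matrix) is not stated, and the helper names are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rotation_zyx(roll: float, pitch: float, yaw: float) -> List[List[float]]:
    """3x3 rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transform_point(pose: Tuple[Vec3, Vec3], p: Vec3) -> Vec3:
    """Map point p from the vehicle frame to the map frame: R @ p + t.

    pose = ((x, y, z), (roll, pitch, yaw)), i.e. six degrees of freedom.
    """
    (tx, ty, tz), (roll, pitch, yaw) = pose
    r = rotation_zyx(roll, pitch, yaw)
    x, y, z = (sum(r[i][j] * p[j] for j in range(3)) + t
               for i, t in enumerate((tx, ty, tz)))
    return (x, y, z)


# Vehicle at x = 10 m, turned 90 degrees left: a point 1 m ahead lies at (10, 1, 0)
print(transform_point(((10.0, 0.0, 0.0), (0.0, 0.0, math.pi / 2)), (1.0, 0.0, 0.0)))
```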

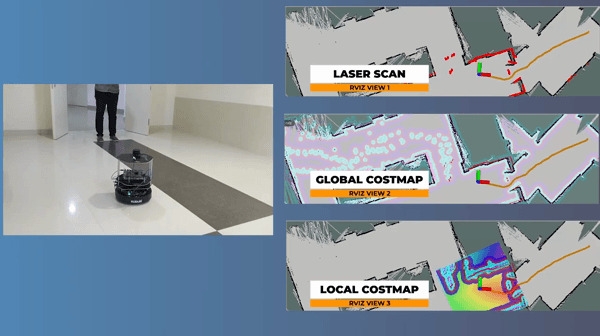

Autonomous navigation with 2D LIDAR

This demo shows autonomous navigation of a TurtleBot 2 on a predefined map built with the GMapping SLAM package. The robot uses the adaptive Monte Carlo localization (AMCL) algorithm to localize itself on the map, navigates between two endpoints along a path generated by the global planner, and avoids obstacles using the local path planner.

Autonomous navigation with 3D LIDAR

This demo combines 3D LIDAR SLAM with loop closure, a 3D object detection model running on point cloud data, and an object collision algorithm running on a safety MCU that engages an emergency safety stop when an object crosses into the robot's configuration space (C-space).
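The demo's collision algorithm is not described in detail, so the following is only a simplified sketch of the idea. For a circular robot, growing each obstacle by the robot radius (the configuration-space view) reduces the check to whether any obstacle point lies within the robot radius plus a safety margin of the robot centre. The function name, footprint model and height limits are hypothetical, and the check is static: it ignores robot velocity and stopping distance.

```python
import math
from typing import Iterable, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in the robot frame, metres


def should_emergency_stop(points: Iterable[Point3], robot_radius: float,
                          safety_margin: float, min_z: float = 0.05,
                          max_z: float = 1.5) -> bool:
    """Return True if any obstacle point intrudes on the robot's protected zone.

    Points outside [min_z, max_z] are ignored as floor or overhead returns.
    """
    limit = robot_radius + safety_margin  # obstacle inflated by the robot radius
    for x, y, z in points:
        if min_z <= z <= max_z and math.hypot(x, y) <= limit:
            return True
    return False


cloud = [(2.0, 0.0, 0.3), (0.4, 0.2, 0.3)]
print(should_emergency_stop(cloud, robot_radius=0.3, safety_margin=0.2))  # True
```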

Contact us for our custom AI BSPs today

You can remotely connect to our edge team and use the edge processor evaluation board to evaluate our deep learning software development environment with Edge AI Cloud. Talk to us today for a free expert consultation.

Explore third party devices in our edge AI ecosystem

| Third party | Specialization | Solutions |
|---|---|---|
| Phytec | System-on-module (SOM) | Learn more |
| Allied Vision | Camera and sensor | Learn more |
| D3 Engineering | Camera, radar, sensor fusion, hardware, drivers and firmware | Learn more |
| Ignitarium | AI solutions and robotics | Learn more |
| RidgeRun | Linux development, GStreamer plugins and AI applications | Learn more |
| Kudan | Simultaneous localization and mapping (SLAM) | Learn more |
| Amazon Web Services | Cloud solutions for machine learning, model management and IoT | Learn more |